Efficiency Unleashed: Navigating Time and Billing with Filevine


Manny emphasized Microsoft’s deep commitment to complying with all applicable regulations globally, including the EU AI Act. “It’s exciting to see how our technologies, particularly through our shared responsibility model, support customers in being cyber-resilient and AI-ready,” he noted. Manny highlighted how Microsoft invests in deep-dive compliance commitments, published in the Microsoft Trust Center alongside new content on the EU AI Act, to help customers see exactly what Microsoft does to secure the underlying cloud infrastructure and what customers need to do to meet their obligations.

In a world where Artificial Intelligence (AI) shapes everything from personalized recommendations to critical infrastructure, compliance with expanding regulations can feel like the “bogeyman” lurking in every boardroom. This sentiment is especially true as the EU AI Act, alongside regulations like GDPR, NIS2, DORA, and CCPA/CPRA, continues to redefine the global compliance landscape.

The evolving regulatory frameworks surrounding artificial intelligence (AI) are poised to reshape how organizations approach compliance and governance. The European Union’s AI Act represents a pioneering stride in AI oversight, establishing the first-of-its-kind regulatory framework specifically designed for AI technologies. This landmark legislation is expected to set a global precedent for AI governance, influencing regulatory approaches beyond the borders of Europe.

Much like the far-reaching impact of the General Data Protection Regulation (GDPR), the influence of the EU AI Act is anticipated to extend worldwide. Governments across the globe may look to this framework as a model for their own AI regulations. As a result, organizations operating internationally—or even those with indirect exposure to European markets—may find themselves needing to adapt to new compliance requirements, regardless of their geographic location.

The stakes for noncompliance with emerging AI regulations are notably high. Financial penalties, regulatory investigations, and reputational damage can swiftly erode an organization’s goodwill. The risks are not limited to deliberate misconduct; inadvertent violations pose significant challenges as well. For example, AI systems can give rise to unforeseen issues such as algorithmic collusion, which many organizations are currently ill-equipped to address.

Despite these challenges, robust compliance with AI regulations offers tangible business benefits. Organizations that demonstrate alignment with stringent regulatory standards can achieve competitive differentiation, bolstering customer trust and enhancing their brand reputation. Furthermore, cultivating a strong compliance posture often leads to improved data governance practices and greater operational efficiency, as businesses streamline processes to meet regulatory requirements. In this way, proactive engagement with evolving AI regulations can serve not only as a risk management strategy but also as a catalyst for operational excellence.

At the heart of our security and compliance offerings is Microsoft Purview, which operates under a shared responsibility model:

Microsoft’s Role

Customer’s Role

By leveraging Purview within this shared responsibility model, businesses can more effectively automate compliance, monitor data flow, and stay informed about changes in regulations.

A holistic compliance strategy is fundamental for organizations leveraging AI technologies. This strategy should be cross-functional, involving the establishment of a dedicated AI governance committee. This committee must include representatives from IT, legal, compliance, and business leadership to ensure diverse perspectives and comprehensive oversight. Additionally, organizations should adopt a risk-based approach, identifying AI use cases that pose the highest compliance risks and allocating resources strategically to mitigate these risks effectively.
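As a rough illustration of the risk-based approach described above, a governance committee could score each AI use case on a few yes/no factors and review the highest-scoring ones first. The sketch below is a minimal example: the `UseCase` fields and the weights are hypothetical and are not taken from the EU AI Act or from any Microsoft tool.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    affects_individuals: bool  # decisions about people (hiring, lending, ...)
    uses_sensitive_data: bool  # personal or otherwise regulated data
    fully_automated: bool      # no human review before the decision takes effect

    def risk_score(self) -> int:
        # Hypothetical weights, chosen only to illustrate prioritization.
        return (
            3 * self.affects_individuals
            + 2 * self.uses_sensitive_data
            + 1 * self.fully_automated
        )


def prioritize(use_cases):
    """Return use cases ordered from highest to lowest risk score."""
    return sorted(use_cases, key=lambda u: u.risk_score(), reverse=True)
```

Calling `prioritize` on an inventory of use cases gives the committee an ordered review queue. The scoring itself would need to be replaced with criteria agreed by legal and compliance teams.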

Investing in security and resilience is another critical pillar. Organizations need to implement robust data classification and discovery processes to maintain a clear understanding of the data their AI systems utilize, especially when dealing with sensitive information. Furthermore, incident response planning must be thorough and coordinated, involving legal and technical teams to develop clear communication protocols for managing breaches or responding to regulatory inquiries promptly and efficiently.
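To make the idea of data classification and discovery more concrete, here is a minimal sketch that scans text records for a few kinds of sensitive data and assigns a coarse label. The patterns, label names, and thresholds are assumptions made for illustration. Production classifiers, including commercial ones, rely on far more robust detection such as checksums, context, and trained models.

```python
import re
from collections import Counter

# Hypothetical patterns for illustration only.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "iban": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_record(text: str) -> Counter:
    """Count matches of each sensitive-data pattern in a text record."""
    counts = Counter()
    for label, pattern in PATTERNS.items():
        hits = pattern.findall(text)
        if hits:
            counts[label] = len(hits)
    return counts


def sensitivity_label(counts: Counter) -> str:
    """Map match counts to a coarse label (the tiers are assumptions)."""
    if counts.get("us_ssn") or counts.get("iban"):
        return "restricted"
    if counts.get("email"):
        return "confidential"
    return "general"
```

Running `sensitivity_label(classify_record(text))` over the records that feed an AI system gives a first, approximate inventory of where sensitive information sits.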

Ethical AI design is essential to foster trust and fairness. Continuous bias detection and mitigation are necessary to prevent unfair discrimination, particularly in sensitive sectors such as lending, hiring, and insurance. Equally important is ensuring explainability and transparency in AI systems. Organizations should meticulously document how these systems make decisions, with special attention to “high-risk” applications as outlined under the EU AI Act.
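One simple way to start on continuous bias detection is to compare approval rates across groups. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The function names are hypothetical, and the 0.8 threshold mentioned afterwards comes from the "four-fifths" rule of thumb used in US employment contexts; it is an assumption here, not a requirement of the EU AI Act.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest
```

A ratio well below 0.8 would be a signal to investigate further. It does not prove discrimination on its own, and a single metric like this is no substitute for a fuller fairness review.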

Staying agile with evolving laws is crucial in the dynamic regulatory landscape. Organizations must actively monitor regulatory updates, such as developments related to the EU AI Act, including enforcement timelines and new guidance documents. Leveraging vendor expertise can also be highly beneficial. Major cloud providers, like Microsoft, offer valuable compliance and security resources that can help accelerate an organization’s readiness and adaptability to regulatory changes.

While AI regulations can look like an insurmountable “bogeyman,” they also provide clear pathways for building resilient, ethical, and customer-trusted businesses. By adopting a holistic compliance framework, investing in automation and risk mitigation, and working in tandem with trusted partners like Microsoft, organizations can harness AI’s potential responsibly and confidently.

At LegalWeek 2025, we hosted a session titled “Global Compliance Deep Dive: Mastering the EU AI Act and International Data Regulations.” Our goal? To shine a light on the so-called “bogeyman” and show that compliance, when approached thoughtfully, can actually be a powerful enabler of innovation and trust. Below, we share our panel’s collective insights, Microsoft’s commitment to regulatory alignment, and actionable steps for navigating today’s AI-driven environment.

The session included insights from our LegalWeek panel:

Focus: AI & Antitrust, Government investigations

Perspective: Jennifer’s extensive background in antitrust law brings a critical lens to the conversation around AI’s potential to facilitate unintentional market collusion or unfair data aggregation. “There’s a misconception that if AI automates pricing or distribution, companies are off the hook for compliance,” she explained. “In reality, regulators are increasingly prepared to hold businesses responsible for how algorithms behave.” Jennifer stressed continuous oversight and tailored training to ensure that AI-driven systems don’t breach competition laws.

Focus: EU AI Act, GDPR, Data Act, Data Privacy

Perspective: Based in San Francisco but qualified in Germany, Dajin underscored the ecosystem of EU digital regulations—the AI Act must be read alongside GDPR, NIS2, and the Data Act. She recommends companies adopt a holistic approach: “Separating AI compliance from privacy compliance can create dangerous gaps,” Dajin cautioned. “A risk-based classification of AI systems, coupled with robust privacy-by-design principles, is your best bet for meeting these interlocking standards.”

Focus: Global Data Protection, incident response, operationalizing compliance

Perspective: Patrick has shepherded Fortune 200 organizations through data breaches and multi-jurisdictional compliance expansions. He advocates for automating as many compliance tasks as possible—such as data discovery and cross-border transfers—to avoid “compliance drift.” “When businesses handle troves of data across multiple regions, relying on manual processes is a recipe for noncompliance,” he said. “Tools that unify data mapping, classify sensitive information, and track regulatory changes can make the difference between success and a very public compliance failure.”

Focus: Bridging Tech & Legal, Ethical AI, IP & Machine Learning

Perspective: As a former machine learning engineer, Laila offered a unique perspective on building compliance into AI systems from the ground up. “You can’t add compliance retroactively without incurring major costs—both technical and reputational,” she emphasized. Generative AI raises additional concerns around IP, training data provenance, and potential biases. “Technical teams and legal teams need a common language and joint accountability to ensure AI solutions respect not only the law but also ethical considerations.”

Microsoft Trust Center

A deep dive into Microsoft’s compliance commitments and best practices for secure cloud adoption. Learn more about the EU AI Act and Microsoft’s customer commitments on our Microsoft Trust Center page.

Purview Compliance Manager

For real-time oversight of your compliance posture across Azure, Microsoft 365, and hybrid environments.

Blog and updates from Natasha Crampton, Chief Responsible AI Officer

Stay informed on Microsoft’s stance regarding responsible AI.

Compliance Program for Microsoft Cloud (CPMC)

Delivers guidance, best practices, and sector-specific roadmaps for aligning with AI and data protection laws.

Back to all articles Company Product Name Filevine Latest Developments and Upcoming Updates Recently unveiled Payments by Filevine is a potent in-house

Back to all articles Company Product Name TimeSolv by profitsolv Latest Developments and Upcoming Updates New reports based on the Logi

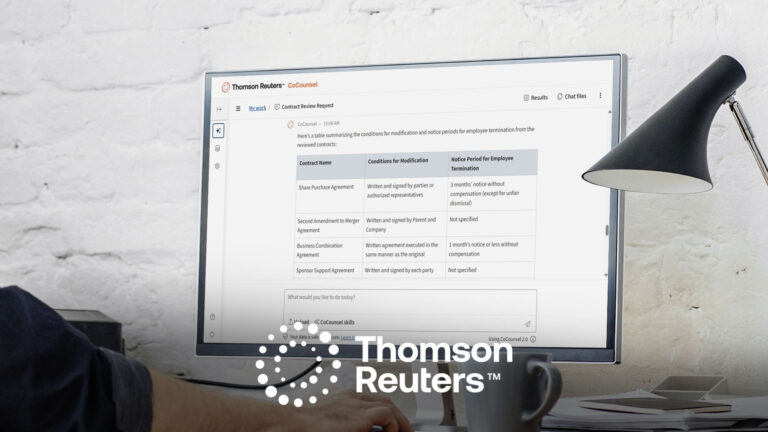

Back to all articles Company Product Name CoCounsel CoCounsel Drafting Latest Developments and Upcoming Updates Single point of access to CoCounsel

Back to all articles Company Product Name Filevine Latest Developments and Upcoming Updates New and evolving AI (artificial intelligence) tools will